Now that AI can search the internet and write a paper, create an image, or even produce a video of someone saying what they never said, we can no longer say that seeing is believing. Today’s guest is Peter Sisson. A serial entrepreneur with a master’s in AI from Stanford, Peter is working to fight fake news, fake reviews, and fake products. Misinformation and polarization have exploded since Peter began his career in Silicon Valley more than 20 years ago, and his latest company, CertifyIt, offers an app that can help stop it.

“In five years, like everything in tech, this is going to get better and better, the resolution is going to get better, and the believability is going to be higher.” - Peter Sisson

Show Notes:

- [1:08] – Peter shares his background in AI and as a serial entrepreneur.

- [3:01] – We believe what we see and it is already getting hard to determine what is real.

- [4:38] – The biggest concern is faked video; faked images are already happening.

- [6:04] – The technology isn’t there yet, and usually you can tell when an image or video is fabricated.

- [7:55] – AI has been trained on the entire internet. AI can write anything you want it to, but none of it is original.

- [9:18] – AI doesn’t actually know anything. It uses what is already available to learn patterns. Peter explains how it works.

- [11:25] – There are a lot of ethical concerns about using AI to create content.

- [12:51] – News providers can use CertifyIt to confirm that the content they release is accurate, so they don’t end up breaking fake news.

- [14:08] – Peter shares the story of CNN breaking news that wasn’t true but really appeared to be accurate.

- [16:16] – How does CertifyIt use metadata to confirm accuracy?

- [18:07] – Chris and Peter discuss some examples of how people could use CertifyIt, which is a public benefit corporation.

- [21:01] – It is a simple concept, but it works and is a viable solution to ensure content is accurate.

- [22:25] – The sooner a video is verified as genuine, the faster the news can be broken.

- [23:46] – Fake reviews are a big problem right now where people are even hired to write them for companies or products.

- [26:21] – Certified location videos could be beneficial for certain products or services.

- [27:50] – A third party like CertifyIt is necessary as people won’t believe the vetting if it’s done by the news organization.

- [30:16] – You can trick people’s brains pretty easily as we are designed to believe what we see. This is especially easy if the viewer is tired or distracted.

- [31:50] – The more people get fooled, the more they will need a tool to vet the accuracy of a video they view.

- [33:29] – There will be false positives and false negatives as the technology gets better and better.

- [35:54] – The things that AI can create are truly very cool and can be entertaining, but the way people use it will change and probably not for the greater good.

- [38:21] – It will be possible to create alternate realities that are very convincing.

- [39:23] – It will probably take something major happening to bring this problem to light for the general public.

- [41:03] – The CertifyIt app can be used in a multitude of ways to provide a sense of confidence that the content you are viewing is true.

Thanks for joining us on Easy Prey. Be sure to subscribe to our podcast on iTunes and leave a nice review.

Links and Resources:

- Podcast Web Page

- Facebook Page

- whatismyipaddress.com

- Easy Prey on Instagram

- Easy Prey on Twitter

- Easy Prey on LinkedIn

- Easy Prey on YouTube

- Easy Prey on Pinterest

- CertifyIt Website

- CertifyIt on Apple App Store

Transcript:

Peter, thank you so much for coming on the Easy Prey Podcast today.

It's a pleasure to be here, Chris.

Can you give me and the audience a little bit of background about who you are and what you do?

Sure! I'm based in Silicon Valley. I am a serial entrepreneur. I've started four companies before this one. They all got acquired in some form. I wouldn't say they were all home runs. Investors certainly made money, sometimes I didn't.

My background before that was I studied AI. I have a master's in Artificial Intelligence from Stanford and an MBA from Tuck. Everything I learned at Stanford in the late 80s is no longer applicable. Neural networks were like a two-credit seminar, and now they're how machine learning is done.

I have started several companies, and I have a background in AI. I'm pretty much a product-focused entrepreneur, and I like to solve problems with software.

Gotcha. Let's talk a little bit about fake news. I suppose we need to clarify the definition of fake news here. We're not talking about cases where people disagree, where there's spin, or where someone labels a viewpoint fake because they disagree with it. We're talking about literally fabricated content, where what is being purported, what the videos show, never actually happened.

Yes. That's correct. That's the bigger threat, and it's where we're headed: these technologies are going to be so good that you can basically provide photographic evidence of yourself doing anything you need to prove. That has implications. Seeing is believing. Our brains are wired to believe what we see, more so than what we hear.

I think it's just going to be really hard to know what's real, or even whether an article was written by a human. It's going to be interesting. It's not necessarily bad, but it could be, and there's just going to be so much to read. There's going to be so much content generated by these AI writers that it's going to be hard to sort through it or know it’s real.

What do you see as the current state of AI in terms of video production, deep fakes, and written content production?

The written content production is going great. You can go into GPT-3, or ChatGPT, OpenAI's latest release, and just say, “Write me a story about this,” or “I want an article that makes the case for that.” Any of these tools lets you generate this content, and that content could be real or it could be fake.

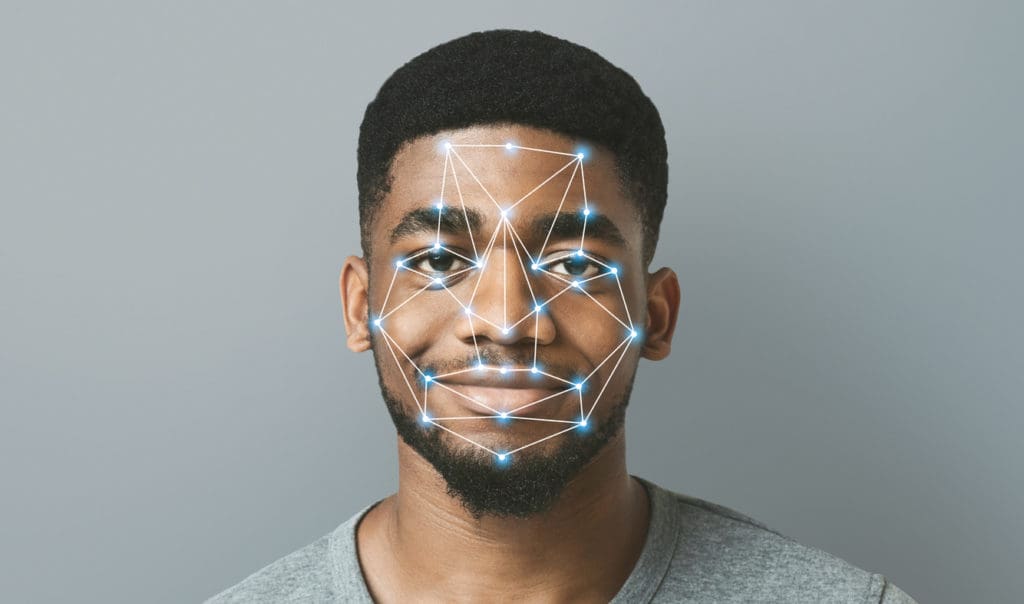

What we're really most concerned about at CertifyIt is video content. Now that photos can be faked realistically, and the fakes will only get better, faking videos is the next step, because a video is just a sequence of photos, right?

When we start seeing things that have been fabricated by computers and believe them to be real, that's when reality stops being real and people start not knowing what to believe anymore.

I mean, we're already kind of getting there. But with these technologies, it's going to be so hard to tell that something wasn't made by a human, and that has implications for society, I think. That's really why CertifyIt was founded, which is to make sure that there's a way to know something is real, and in this case, a video.

Let's take a step back. When it comes to deep fake videos, how much work does it take with today's methods to create a video that you and I might look at and say, “We can see artifacts. We can tell that's not real”? And with today's technology, what are some of the things you could look for in a video to identify it as a deep fake?

Yeah, great question.

Technology has certainly come a long way. Right now it isn't generating videos; the best it can do is static images. And if you look closely at the detail, you can tell they were fabricated. Look at the leaves of trees, or at the detail in other humans or animals in the photo, and you can tell it was generated.

In five years, everything in tech is just going to get better and better, and the resolution is going to get higher, and the believability is going to get higher. My area of concern is that you can now reanimate someone and make them say something using computer software.

That's another potential problem. These tools are going mainstream: very sophisticated tools for generating images, which weren't possible before, are now open to everybody.

I can go to OpenAI, sign up for free, generate a number of images at no cost, and then pay a small amount of money to have more images generated. I can get access to GPT-3 and ChatGPT to generate research papers, articles, instruction manuals, stories, and paragraphs.

And whatever you want it to write, it will write it very convincingly because it's basically been trained on the entire internet. It can never be original because, in a sense, the AI is always the average of all of its input, and all of its training.

That's one of the interesting things: it's not going to invent stuff, because it can only build from what's already been done before, but a lot of great ideas are built from prior ideas. It can't truly be original, but it can seem pretty original in the way it has such a command of the facts and can pull them together into a detailed article in just a few seconds.

In terms of getting it right, the challenge there is the source material. If there are a lot of erroneous data sources that the AI might be looking at, is it just going to pull in factually incorrect information because it doesn't really know the material?

That's correct. It doesn't know anything. It's purely about how frequently something appears on the web, because these language models are trained on data gathered by crawlers that go out and basically crawl everything on the web.

Particularly for the visual ones, they look for pictures that have been tagged with their contents so that those tags can be associated with the pictures for training purposes. Those tools are getting smarter as the internet gets smarter, but they do prune the training data, because the internet is full of a lot of negative stuff.

The biggest problem they had when they first did them is that often the images would skew sexual because there's so much sexual content on the internet. They had to adjust training to remove the sexual stuff, and they had to decide what they were going to train it with, but it was still trained with billions of bits of data.

If you want to think about it in the simplest terms, when it's asked to write an article, it's saying, “The most common, most average, if you will, most typical version of that paper looks like this,” and then it writes the paper. It's never going to produce original research or original innovation. It can only spit back what humans have trained it on.
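As a drastically simplified illustration of the “most typical version” idea, here is a toy bigram model: it counts which word most often follows each word in a tiny corpus, then greedily emits the most frequent continuation. Real systems such as GPT-3 use neural networks over tokens rather than raw word counts, so treat this only as an analogy; the corpus and the function names are made up for the example.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus: str) -> dict[str, Counter]:
    """Toy 'training': count how often each word follows each other word."""
    words = corpus.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(follows: dict[str, Counter], start: str, length: int) -> str:
    """Greedily append the most frequent next word, i.e. the 'most typical' one."""
    out = [start]
    for _ in range(length):
        options = follows.get(out[-1])
        if not options:
            break  # this word was never followed by anything in the corpus
        # most_common(1) breaks ties in favor of the word seen first
        out.append(options.most_common(1)[0][0])
    return " ".join(out)


corpus = "the cat sat on the mat the cat ate the fish the dog sat on the rug"
model = train_bigrams(corpus)
print(generate(model, "the", 4))
```

With this corpus it prints “the cat sat on the”: every word is simply the most frequent follower in the training text, which is why such a model can only echo patterns it has already seen.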

That's kind of what they're struggling with now, and ethicists are struggling with what to train it on. I mean, the reality is that humans have sexual instincts as part of our being, so is it artificial to remove all that from the training and bias it against sexual content?

There are a lot of ethicists involved in this debate, and lots of people are trying to figure it out, because it's a quasi-human entity that we don't quite know how to handle yet. I mean, if it's having intelligent conversations with us, or appears to, and it's writing these amazing articles and creating these believable photos, that's pretty powerful. I think there's a whole other conversation about what this means when images no longer count as what used to be called photographic evidence. Now that's gone. What takes its place?

And that's kind of where I think CertifyIt comes in. We're trying to own the spot that cameras originally held for proving things, by making sure that the video can't be tampered with. It can be used for everything, including reviews; I mentioned the problem with fake reviews.

It can be used by news organizations to ensure that the video they're getting is accurate. The big problem now is that they can't break the story as soon as they get the video, because they have to vet it first to make sure it's real.

In this case, if it comes from CertifyIt, they'll know that it hasn't been tampered with and couldn't have been altered, and that the location, date, and time are accurate. They can basically put it up right away. What news organizations are struggling with is how to break news before it breaks on the internet.

Back in 2011, there was a Family Guy skit with Tom Tucker, the news anchor, saying, “This is Tom Tucker reporting news four hours after you read about it on the internet.” And that was in 2011.

News organizations are really struggling with the fact that more and more of their source content is going to be consumer-generated mobile video of people witnessing different things. The problem is that opening up to consumer video is a Pandora's box: you don't know what you're going to get.

When CNN had iReport, which they launched in 2006, it was a way for you, as a citizen, to file a video report that might air on CNN. That worked great until people started uploading fake stuff, and CNN actually reported some fake news about Steve Jobs’ death when he hadn't died. That fake upload fooled CNN, they aired it, and they were wrong. So then they said, “We can't do this.” Eventually, in 2015, iReport was shut down.

But with a tool like CertifyIt, they can bring it back, because they know that all the videos they get were actually filmed at the stated time and location, and that no other content was edited into them because they're original.

That just saves a ton of time for news organizations because they don't have to vet the videos.

Let's talk a little bit about the product and how it works because I can see some huge benefits, but also some barriers to entry, barriers to usage. Let's kind of talk about some of that.

Yeah.

What does the product actually do to help prevent the fake video from spreading?

It basically says the only way to know for sure is if we create the video in the app and control it going forward. There's no loose fodder, no loose video that can be combined or edited into other videos and be used to create fake videos and fake news.

Think of it as like your camera app but the difference is when you record, it's not going into your camera roll. It's going up onto our server and then it's streaming, and then we can check the metadata.

For example, if you say you're in a certain timezone but we know your location and we know that that timezone is different from the one you're saying, then we'll correct it to be the actual timezone. We can do things like that.
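The timezone correction Peter describes can be sketched roughly as follows. He doesn't say how CertifyIt implements it, so everything here is an assumption: the capture time is taken to be a trustworthy UTC timestamp (for example, stamped by the server on upload), and the location's UTC offset is passed in directly, whereas a real system would look it up from the GPS coordinates with a timezone database. `correct_timezone` is a hypothetical name.

```python
from datetime import datetime, timedelta, timezone


def correct_timezone(recorded_utc: datetime,
                     claimed_offset_hours: float,
                     location_offset_hours: float) -> tuple[datetime, bool]:
    """Express a recording time in the UTC offset of where it was filmed.

    recorded_utc: aware capture time in UTC (assumed trustworthy).
    claimed_offset_hours: the offset the device reports.
    location_offset_hours: the offset for the GPS location (hypothetical
        input; normally derived from a timezone lookup on the coordinates).
    Returns the local time and whether the claimed offset had to be corrected.
    """
    corrected = claimed_offset_hours != location_offset_hours
    # Always trust the location-derived offset over the device's claim.
    tz = timezone(timedelta(hours=location_offset_hours))
    return recorded_utc.astimezone(tz), corrected


# Device claims UTC-5, but the GPS position is in a UTC-8 zone.
recorded = datetime(2022, 12, 1, 20, 0, tzinfo=timezone.utc)
local_time, was_corrected = correct_timezone(recorded, -5, -8)
print(local_time.isoformat(), was_corrected)
```

Trusting the location over the device's claim mirrors the example in the interview: the stated timezone is treated as a hint to check, not as evidence.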

Basically, it's just a way for us to know that the video went straight from its creator to being hosted with no way to edit or change it. All the app does is record the video. It lets you record clips so you can edit them into a story and then it forces you to pin the video to the map within 150 meters of where you are to allow for some distortion.
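Peter doesn't say how the app measures whether a pin is within 150 meters of the phone's position. A common way to compare two GPS coordinates is the haversine great-circle distance, sketched below under that assumption. The function names and the spherical-Earth radius are illustrative choices; only the 150-meter tolerance comes from the interview.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters (spherical approximation)
PIN_TOLERANCE_M = 150       # tolerance mentioned in the interview


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def pin_is_valid(device_lat: float, device_lon: float,
                 pin_lat: float, pin_lon: float,
                 tolerance_m: float = PIN_TOLERANCE_M) -> bool:
    """Accept the map pin only if it lies within tolerance of the device's GPS fix."""
    return haversine_m(device_lat, device_lon, pin_lat, pin_lon) <= tolerance_m


# A pin about 111 m north of the phone passes; one about 222 m away does not.
print(pin_is_valid(39.0968, -120.0324, 39.0978, -120.0324))
print(pin_is_valid(39.0968, -120.0324, 39.0988, -120.0324))
```

The tolerance leaves room for the GPS drift Peter alludes to when he says the 150 meters allow for “some distortion.”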

And then it creates a link and opens a sharing window, and you can text that link, post it, email it, put it on social media, whatever you want to do with that link.

Let’s say you have a contractor working on a summer home up in Tahoe, and you can't get up there to check on them all the time. You could ask them to record an end-of-day report with the app, and it would show that they're there and that this work was actually done today. It's a big file, but it's not being emailed because it's already on the server, so all you send is a link to stream it.

We just kind of made it really easy to record and share videos that haven't been tampered with and can't be tampered with.

I can see benefits of things like that outside of news and whatnot, whether you're talking about contractors or tow truck drivers.

Before I tow a vehicle, I walk around the outside of it showing what dings, scratches, or dents are or aren't there, and I do the same thing when you take it out of my tow yard. That way I have indisputable proof that the dings weren't put on your car while it was in my lot, that sort of thing.

It sounds like there are a lot of interesting uses, but does it also rely on explicit trust in your organization, that your certification is true?

Yeah. That's a good point. And that's why we set up the company as a public benefit corporation. We're a Delaware C-Corp, which is the standard corporation format for companies that want to go public. There are lots of reasons why companies incorporate in Delaware that we don't need to go into.

With a benefit corp, you commit to fundamental operating principles about fairness, truth, data protection, and a bunch of other things. The problem is that it doesn't provide any legal protection from shareholders, because ultimately a C-Corp has a sole duty, which is to maximize shareholder value, although that’s sort of a purist view. Business philosophy is changing now, and businesses are about more than just maximizing shareholder value. I think that's becoming common.

A Delaware public benefit corporation is a new form of legal entity, created maybe seven years ago, I think, that gives you all the benefits of a C-Corp but allows you to be mission-driven and put that mission into your bylaws. Then you have to file a report every two years on your progress toward that mission.

It's kind of a corporation with a legally enforced conscience, in that you've literally elected to incorporate as a public benefit corporation, which means you're now duty-bound to achieve your social objectives. Ours are in our bylaws: to promote the spread of accurate, truthful information and to fight fakery online.

It really is a solution. I've tried to get hackers to find a way around it. It's such a simple concept, but it works. You just upload the video and stream it from a link, and no one can tamper with it.

We're already seeing news organizations willing to air video because of that desire to be first to publicly disseminate the information. They're still going to air stuff because they want to be first. Whether it's CertifyIt or a competing platform, they aren't going to want to jump through the hoops of verification before they run with it, because they want to be first.

Yeah. That's it. You nailed it there. That's their dilemma. They want to break the news as quickly as they can, but they also want to be factual, and they have to compromise on one of the two, speed or knowing that it's factual, in order to get it out.

The thinking with CertifyIt is that you remove the uncertainty about the origin of the video, or its provenance, or whether it's been faked or not. If you're getting people to use it, you as an organization that uses it will be able to break the news faster because you won't have to vet it.

With every other piece of video you get, it's commonly accepted that you have to vet it: you have to figure out where it came from and whether it was tampered with. The challenge here is, one, there's a behavior change involved. It's my behavior, and me getting someone else to change their behavior.

Now, with my contractor, I say, “Hey. Could you use this app, CertifyIt, to give me a progress update every day or every week?” It's about me pushing it onto them because they have to change their behavior to use it. That's a battle. That's the hard go-to-market strategy when you've got to change end-consumer behavior.

The way we're looking at it, we'll initially go to the travel sites like booking.com, TripAdvisor, and Travelocity (they all still exist, all of these booking sites) and offer a level of review that's a certified review, because fake reviews are a big problem now. You can hire companies in China to write all these great reviews. Your competitors will come in and write nasty reviews, and it's all kind of screwed up because nothing is checked.

I think we can pitch these firms, “You should have a level of review that's CertifyIt that guarantees that the person was actually at that location before they can submit a review.” And that's what our app can do because the app doesn't let you post unless you reveal your location.

There could just be a step in the review process where reviewers first submit a short video that proves they were there, or the sites could even move to supporting video reviews, which I know is of interest to some of them.

Our plan is to go with these big partners, who then make the tool available to their end users. If I'm on eBay and I want to buy something, but I'm not sure it works as promised, I'd want eBay to have a link there to install the CertifyIt app, with a suggestion: if you’re not sure, have the seller send you a CertifyIt video of the product to be sure they have it in stock and that it works as promised, something like that.

That's how we're going to try to do it: by partnering with the organizations that are facing these problems. From the point of view of someone like booking.com or TripAdvisor, they've given their customers a tool to protect themselves, and it potentially takes a little of the burden off them, because right now I think they often just eat it. If someone gets scammed, the person gets reimbursed and they eat the cost.

I think there'd be a business argument for these firms to support more accurate reviews, because that's the business they're in. They're supposed to be providing reviews, and their core product is broken because reviews can be easily faked. I think they're going to want to look at something like us to help solve that problem.

I can definitely see this benefiting short-term rental companies, your Airbnbs and whatnot, where the host has to upload a certified video of the location, as opposed to, “Hey, I went to a stock photo site, grabbed 15 stock photos, and uploaded them claiming it's a luxury condo when it's a little hole in the wall with cockroaches everywhere.”

That happens.

Yeah.

Absolutely. I mean, I checked into a hotel in Italy that was nothing like its pictures. It was just a totally fake profile.

It was amazing to me that there was no way to prevent that from happening, but this would have prevented it. If you say you're only going to go to places that can provide a CertifyIt video to prove they're real, then you have a better chance of having a successful vacation. The travel use cases are big. I'm also hoping we can get the news organizations to promote it collectively as an independent third-party service that's a public benefit corporation.

I think people won't trust the vetting if it's done by the news organization itself, because everybody believes that news organizations have agendas, which they do. If we could get everybody to use CertifyIt as the gold standard for verified video, then I could see it growing in all sorts of industries, everything from insurance claims to dating: getting someone to send a video of what they actually look like before you meet them.

There are all sorts of places where people get fooled online and this is designed to be a tool to prevent that.

I think we're kind of in this world now where we have citizen media, so to speak. Your traditional media channels aren't even involved anymore. Bob goes outside, sees something, films it, and uploads it to his favorite social media channel and things just take off from there.

There is no certifying organization. There is no news channel doing due diligence. I either trust Bob or I don't, and I'm either going to share his content or I'm not. At some point, people make the assumption, “Well, 45 other people have shared it. It must be true. Let me turn around and share it as well.”

We're losing these organizations, which in theory should be doing their due diligence, to citizen journalism. Your news stations are already struggling just to keep up with what's going on, and now they're literally being bypassed.

Is it that we as citizens and consumers of media need to hold ourselves to a higher standard, when we've kind of proven that we're really bad at standards?

Yeah. You're right. I mean we've got to stop believing what we see. And that's really hard because our brains are trained to believe what they see. Seeing is believing. We're all taught that. Subconsciously, when you see things, they register.

Because you can make videos that convincingly show someone doing something they never did, or something happening that never happened, you can trick people's brains pretty easily. If they're just on autopilot and the video scrolls by and they watch it, they'll believe it. It seeps into their brains by osmosis even if they're not really paying attention.

As you said, which I think is true, news organizations are struggling because they can't keep up with social media, which is where most people get their news now. Even if the news organizations don't make it, the consumer can sort of say, “I saw this video that somebody's sharing, but it could be fake. I'm not going to believe that any video is real unless it's a CertifyIt video.”

It would require awareness that the tool exists, and probably someone having been exposed to the problem through an online scam or something, for them to take it seriously, but I think those wake-up calls are bad. People are getting scammed or fooled with this new generative AI that creates fake videos, images, and articles.

I think people are going to get fooled more and more, and then they're going to start looking for a tool they can use to get a video they can be absolutely sure is real.

It's a weird product to bring to market because you've got to sort of bring it to market by convincing people to ask other people to use it.

Yeah. We haven't really seen substantial fake videos with significant consequences. We've all seen actors' voice and body doubles with AI-generated content layered on top, but that's fun and entertaining, not malicious.

The stuff that I've seen that is “malicious,” you spend a couple of minutes looking at it and you see facial tint changing every couple of frames and the person only looks straight into the camera and never turns. There are a lot of tells in the current AI stuff.

Is it going to take a very convincing video of some major politician doing something particularly horrific?

Probably.

And there's only this one video source, with nothing to corroborate it.

Yeah. There'll be false negatives and false positives. There could be videos created of a politician visiting people in a home for the elderly when he never actually did it. He just generated the video.

There's that kind of fakery, as well as just the fraud where you're just outright lying. And because a lot of people don't fact-check, once they've picked who's going to tell them their news, they just believe it and we get these silos like we have.

There are always going to be problems with fakery. What we're trying to do with CertifyIt is solve just one little problem: if you really need a video to prove something happened, to prove something was finished, or to prove something is the way it is, you can't just ask for a normal video, particularly from strangers, because they can upload anything. They can make anything happen.

If you're dealing with a situation where there's low trust and you need to verify something remotely, that's really where CertifyIt comes in as a really handy tool.

You're on the bleeding edge of a problem that is just beginning to happen.

That's right. It's only because I have a background in AI and I've been following these technologies and seeing where they're headed that I realize this is actually happening now.

The year 2022, I think, is going to be known as sort of the year that AI really started to tip into the mainstream. I mean, all the social channels are filled up with photos generated with these image generators that are so entertaining, but so completely unreal.

Dog, strawberries, and things like that.

Absolutely! You can make anything, and it's so cool. Ask for a dog petunia, and it'll make a petunia out of dog fur. It's just crazy.

It's still a little early; for now, people can pretty much tell. But given the rate at which these technologies evolve and improve, we're at a tipping point, like I said.

I think in five years, generative AI is going to be able to produce things that you can't tell whether it's real or not.

Yeah. “Hey, Mr. AI. Produce a video of Peter talking about cats that he loves when he actually hates cats.” That sort of thing.

Right. He's a dog guy. Exactly. Put that all on social media. Peter's a cat guy. I mean, it's just so easy.

For a while, OpenAI's DALL·E restricted the uploading of faces so that you couldn't make people do things they never did, but that was no fun, because that's what people wanted to do most, to fuck with their friends. Pardon my French.

Only a few months later, they said, “We're going to allow you to upload photos.” So now I can upload a photo and put myself in a fancy hat on the […] Goshen Parish, whatever I want to be doing. I can upload my photo and then make all that happen around me. I just describe where I want to be and what I want to be doing, and it'll make it.

That's kind of scary on the fraud front because people can create these personas of, “Hey, I'm this world traveler when I've never left the city I grew up in. I've got all this footage.”

There’s so much content.

So much content of me all over the world, yet it's all entirely generated.

Yeah. They could live artificial lives. We've heard of those sorts of long-haul frauds where people may be living a whole life that's built on fraud.

It's going to be possible to create these alternate realities that are really convincing, and it really is going to be up to consumers of video to step back and say, “Wait a minute. How do I know this is real?” And I think that extra step of saying, “Wait a minute. Seeing is no longer believing.” I think people are getting wiser to that. Certainly, they recognize that photos are easy to fake.

When it comes to video, I don't think they realize how close we are to generative AI video where you can set the scene and say what you want to happen and it all plays out.

Yeah. As soon as that happens to a major politician, we'll all be very aware that it can happen.

Yeah, exactly. And then everyone will raise the alarm and be up in arms, and change will happen. But yeah, you're right. It will probably take some major fraud, or a factual blunder by a major news outlet, or something like that to wake people up to this.

Hopefully consumers can get ahead of the curve, start asking for solutions and thinking about the consequences before that happens, and just have that seed of “How do I know this is real?” when they encounter content, particularly if it's sensationalized.

Yes, exactly. Content always seems to have an agenda now. It's designed to bolster the point of view of whoever’s sharing it, and it's really easy to sculpt content, or just cherry-pick it, to tell the story you want.

It's really about consumers taking the extra step to verify things if it's important to them. And that’s what CertifyIt is trying to do, is to be one of those tools that you can say, “OK, I need to see that something happened far away from me, and the person is just going to show me something I don't know anything about. I want to be 100% sure that this happened.”

If the person has an iPhone (we’re only on iOS right now), you just say, “Hey, can you install this little app and send me a video? The app is really simple. It just takes your phone number, not a lot of sign-up stuff.” And boom. You've got a tool you can ask remote people to use to prove something, or even for your kids to prove where they are.

As we wrap up here, where could people find more about the app and more about you?

The app is CertifyIt. It's only on iOS now, so if you have an iPhone, go to the App Store and search for CertifyIt, one word. You’ll see us. There are others in there with similar names, but you'll see CertifyIt.

Then we also have our website. If you want to learn more about us, the company, and me, you can go to certifyit.org which is our main website.

And we will make sure to link those in the show notes. Any parting wisdom before we wrap up today?

No. I just wish everyone a wonderful, happy holiday season. And if you're not sure of something, be sure to verify it with CertifyIt or other tools that are out there.

Great. Thank you so much for coming on the podcast today, Peter.

It’s my pleasure.
